Object Detection Using Machine Learning

This project aims to develop an object detection system using state-of-the-art models and datasets to identify and locate objects within images.

Technologies and Tools

TensorFlow: The deep learning framework used for model development, training, and evaluation.

LabelImg: Used to annotate and label the training images.

Roboflow: Used to generate TensorFlow records (TFRecord files) for efficient data ingestion.

EfficientDet: Implementation of the EfficientDet model for object detection.

SSD MobileNet v2: Implementation of the SSD MobileNet v2 model.

SSD MobileNet v2 FPNLite: Implementation of the SSD MobileNet v2 FPNLite model.
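For the annotation step listed above, LabelImg can save each image's boxes as a Pascal VOC XML file. The sketch below shows one way such a file could be read into plain Python tuples. The sample XML, the `penny` and `dime` labels, and the `parse_voc` helper name are illustrative assumptions, not part of this project's code.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the Pascal VOC layout (labels and sizes are made up).
SAMPLE_XML = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>penny</name>
    <bndbox><xmin>48</xmin><ymin>60</ymin><xmax>144</xmax><ymax>156</ymax></bndbox>
  </object>
  <object>
    <name>dime</name>
    <bndbox><xmin>300</xmin><ymin>200</ymin><xmax>380</xmax><ymax>280</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return (filename, [(label, xmin, ymin, xmax, ymax), ...]) from VOC XML text."""
    root = ET.fromstring(xml_text.strip())
    boxes = []
    for obj in root.findall("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(
            int(bndbox.findtext(key)) for key in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.findtext("name"),) + coords)
    return root.findtext("filename"), boxes


filename, boxes = parse_voc(SAMPLE_XML)
print(filename)
for label, xmin, ymin, xmax, ymax in boxes:
    print(label, xmin, ymin, xmax, ymax)
```

Reading the annotations this way is handy for a quick sanity check (for example, counting boxes per class) before converting the dataset to TFRecord files.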

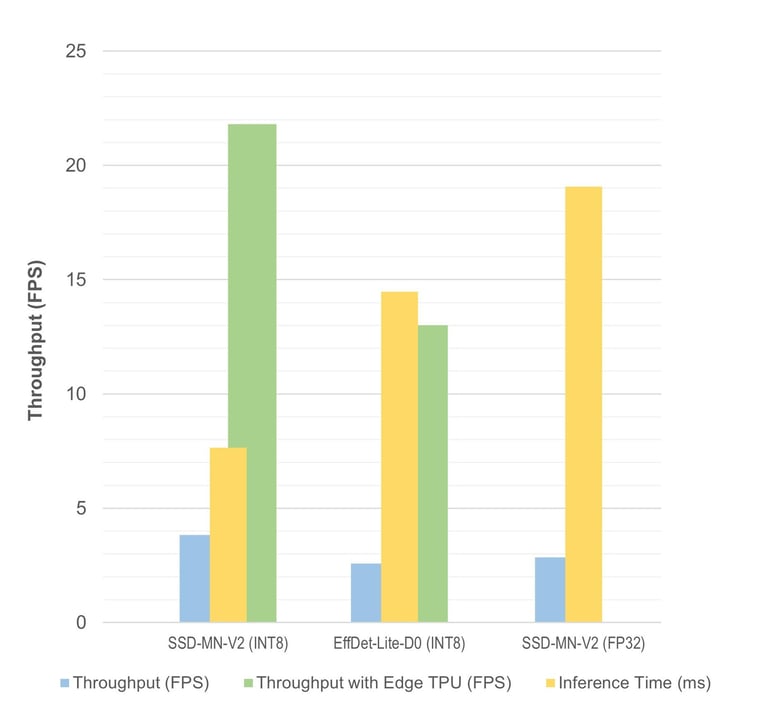

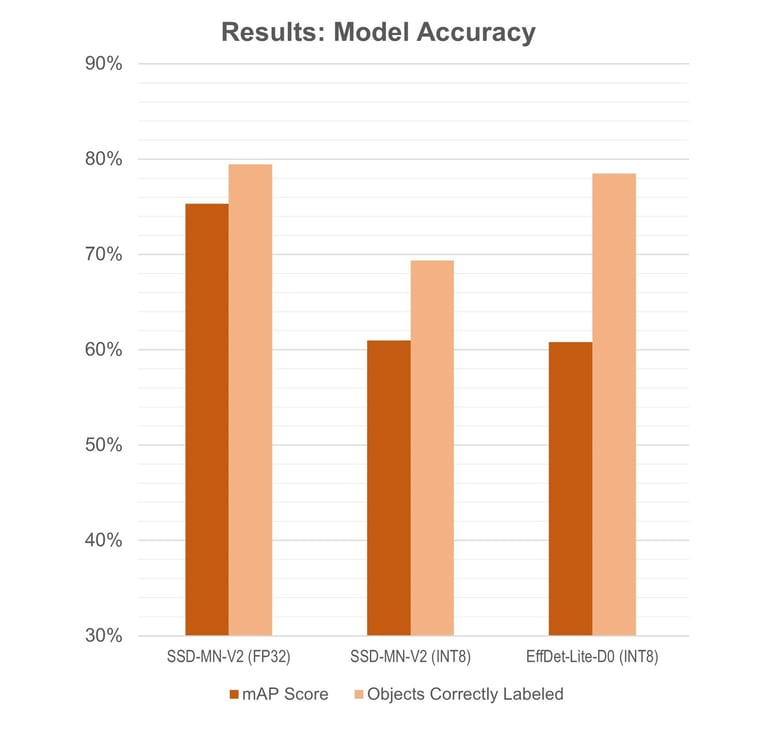

Here’s a summary of each model’s performance based on the data above.

SSD-MobileNet-v2: With an inference time of 68.96 ms and a COCO mAP score of 60.99%, the quantized version of this model strikes a good balance between speed and accuracy. The floating point version can be used for a decent boost in accuracy with only a slight reduction in speed.
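COCO-style mAP scores like the one quoted above are built on intersection over union (IoU): a predicted box only counts as a correct detection when it overlaps a ground-truth box by at least some IoU threshold. The following is a minimal sketch of that overlap measure, not the evaluation code used for these results; the `iou` helper name and the example boxes are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    # Corners of the overlapping rectangle, if there is one.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    # Clamp to zero so disjoint boxes yield no intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical ground-truth and predicted boxes, in pixels.
truth = (0, 0, 100, 100)
prediction = (50, 0, 150, 100)
print(f"IoU = {iou(truth, prediction):.3f}")  # IoU = 0.333
```

A prediction shifted by half the box width, as in this example, reaches an IoU of only about 0.33, so it would not count as a match at the commonly used 0.5 threshold.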

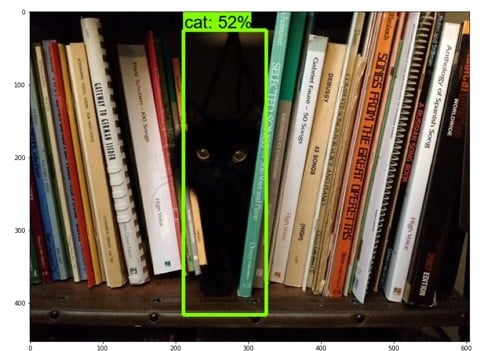

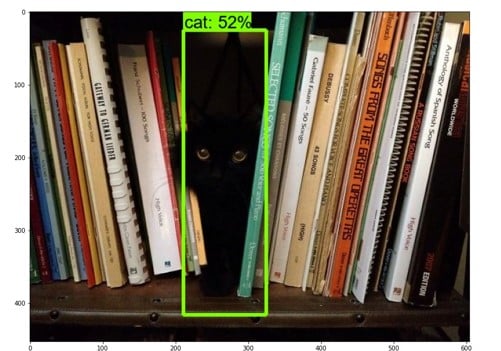

SSD-MobileNet-v2-FPNLite: It’s not quite as fast as regular SSD-MobileNet-v2, but it has excellent accuracy, especially considering the small size of the training dataset. It successfully detected 306 of the 335 objects in the test images. While that total is only slightly higher than the total for EfficientDet-Lite-D0, its prediction confidences were generally between 90% and 99%, whereas the EfficientDet model’s confidences ranged widely, from 50% to 95%.

EfficientDet-D0: This model was slow and inaccurate on this dataset. The poor accuracy is likely due to the limited size of the coin dataset I used for training. In theory, this model should be able to achieve higher accuracy than any other model in this list. It is best reserved for applications with more compute power and a larger training dataset.
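The two figures in that paragraph can be made concrete with a little arithmetic. The sketch below computes the detection rate for 306 of 335 objects and shows why the confidence range matters once a score threshold is applied. The sample detections, the 0.9 threshold, and the helper names are assumptions for illustration, not values from the test run.

```python
def detection_rate(detected, total):
    """Fraction of ground-truth objects that the model found."""
    if total <= 0:
        raise ValueError("total must be positive")
    return detected / total


def keep_confident(detections, min_score):
    """Keep only (label, score) pairs whose score meets the threshold."""
    return [d for d in detections if d[1] >= min_score]


# Figures quoted in the text: 306 of 335 objects detected.
print(f"Detection rate: {detection_rate(306, 335):.1%}")  # Detection rate: 91.3%

# Hypothetical detections: scores clustered near the top survive a strict
# threshold, while a model whose scores range from 0.50 to 0.95 loses many.
detections = [("penny", 0.97), ("dime", 0.55), ("nickel", 0.91)]
print(keep_confident(detections, min_score=0.9))
```

A model whose scores sit consistently above 90% lets you set a strict threshold to suppress false positives without discarding many true detections.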

Conclusions

Here are my top recommended models based on the results of this test.

First Place: SSD-MobileNet-FPNLite-320x320

This model has exceptional accuracy (even when trained on a small dataset) while still running fast enough to achieve near real-time performance. If you need high accuracy and your application can wait a few hundred milliseconds to respond to new inputs, this is the model for you.

Why is this model my favorite? Generally, accuracy is much more important than speed. This is especially true when creating a prototype that you want to demonstrate to stakeholders or potential investors. (For example, it’s more embarrassing when your Automated Cat Flap prototype accidentally lets in a raccoon because it was misidentified as a cat than when it takes three seconds to let in your cat instead of two seconds.) SSD-MobileNet-FPNLite-320x320 has great accuracy with a small training dataset and still has near real-time speeds, so it’s great for proof-of-concept prototypes.

Second Place: SSD-MobileNet-v2

The SSD-MobileNet architecture is popular for a reason. This model has great speed while maintaining relatively good accuracy. If speed is the most crucial aspect of your application, or if it can get away with missing a few detections, then I recommend using this model. Its lower accuracy can be improved by using a larger training dataset.
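When speed is the deciding factor, it helps to translate a per-image inference time into a frame rate and to measure latency the same way for every model. The sketch below is one simple way to do that; `fake_inference` is a stand-in for a real model call, and the run counts are arbitrary assumptions.

```python
import time


def mean_latency_ms(fn, runs=20, warmup=3):
    """Average wall-clock time of fn() in milliseconds, after a few warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    elapsed = time.perf_counter() - start
    return elapsed / runs * 1000.0


def fps_from_latency(latency_ms):
    """Frames per second that a given per-frame latency allows."""
    return 1000.0 / latency_ms


def fake_inference():
    # Placeholder workload; replace with a call into the actual model.
    sum(i * i for i in range(10_000))


# The 68.96 ms figure quoted earlier for quantized SSD-MobileNet-v2.
print(f"{fps_from_latency(68.96):.1f} FPS")  # 14.5 FPS
print(f"Measured: {mean_latency_ms(fake_inference):.2f} ms per call")
```

The warm-up calls keep one-time setup cost out of the average, which matters because the first inference of a model is often much slower than the rest.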

Third Place: EfficientDet-Lite-D0

This model has comparable speed to SSD-MobileNet-FPNLite-320x320, but it has worse accuracy on this dataset. I recommend trying this model if you aren’t achieving good accuracy with the SSD-MobileNet models, as the different architecture may perform better on your particular dataset. Note that larger (and thus more accurate) EfficientDet-Lite models are trainable through TFLite Model Maker, but they will have much slower speeds.

Overview

Object detection is a fundamental task in computer vision with numerous applications, such as autonomous driving, surveillance, and image analysis. This project compares three models (EfficientDet, SSD MobileNet v2, and SSD MobileNet v2 FPNLite) trained on three distinct input image sizes (512x512, 320x320, and 640x640) to evaluate their performance and efficiency.